In 1968, West Churchman wrote, “…there is a good deal of turmoil about the manner in which our society is run. …the citizen has begun to suspect that the people who make major decisions that affect our lives don’t know what they are doing.”[1] Churchman was writing at a time of growing concern about war, civil rights, and the environment. Almost fifty years later, these concerns remain, and we have more reason than ever “to suspect that the people who make major decisions that affect our lives don’t know what they are doing.” Examples abound.

Among the eight contenders for the Republican nomination in the 2012 United States presidential election, only Jon Huntsman unequivocally acknowledged evolution and global warming.[2] While a couple of the candidates may actually be anti-science, what is more troubling is that almost all of them felt obliged to distance themselves from science, because a significant portion of the U.S. electorate does not accept it. This fact suggests a tremendous failing of education, at least in the U.S.

But even many highly educated leaders do not understand simple systems principles. Alan Greenspan, the vaunted former Chairman of the U.S. Federal Reserve Board of Governors, holds a PhD in economics, yet he did not believe that markets need regulation to ensure their stability. After the financial disaster of 2008, Greenspan testified to Congress, “Those of us who have looked to the self-interest of lending institutions to protect shareholders’ equity, myself included, are in a state of shocked disbelief.”[3] Despite his familiarity with the long history of bubbles, collapses, and self-dealing in markets, Greenspan expected that people whose bonuses are tied to quarterly profits would act in the long-term interest of their neighbors. Like many libertarians, Greenspan relied on the dogma of Ayn Rand, rather than asking whether systems models (models of stability, disturbance, and regulation), like those described by James Clerk Maxwell in his famous 1868 paper, “On Governors,”[4] might be needed in economic and political systems.

Misunderstanding of regulation moved from the fringe right to national policy when Ronald Reagan was elected President of the United States, persuading voters that “government is not the solution to our problem; government is the problem.”[5] Reagan forgot that (under the U.S. system) “we, the people,” are the government. Reagan forgot the purpose of the U.S. government: “to form a more perfect Union, establish Justice, insure domestic Tranquility, provide for the common defence, promote the general Welfare, and secure the Blessings of Liberty to ourselves and our Posterity…” that is, to create stability.[6] And Reagan forgot that any state—*any system*—without government is by definition unstable, inherently chaotic, and quite literally out of control. We need to remember that “government” simply means “steering” and that its root, the Greek word *kybernetes*, is also the root of cybernetics, the study of feedback systems and regulation.

Churchman points out that decision makers “don’t know what they are doing” because they lack “adequate basis to judge effects.” It is not stupidity. It is a sort of illiteracy. It is a symptom that *something is missing* in public discourse and in our schools.

We need *systems literacy*—in decision makers and in the general public.

Since Maxwell’s 1868 paper, a body of knowledge about systems has grown, yet schools largely ignore it. This body of knowledge should be codified and extended. And it should be taught in schools, particularly schools of design, public policy, and business management, but also in general college education and even in kindergarten through high school, just as we teach language and math at all levels.

**Why do we need systems literacy?**

Russell Ackoff put it well: “Managers are not confronted with problems that are independent of each other, but with dynamic situations that consist of complex systems of changing problems that interact with each other. I call such situations *messes*.”[7] Horst Rittel called them “wicked problems.”[8]

No matter what we call them, most of the challenges that really matter involve systems, for example, energy and global warming; water, food, and population; and health and social justice. And in the day-to-day world of business, new products that create high value almost all involve systems, too, for example, Alibaba and Amazon; Facebook and Google; and Apple and Samsung.

For the public as well as for designers, planners, and managers, part of the difficulty is that these systems are complex (made of many parts, richly connected), dynamic (growing and interacting with the world), and probabilistic (easily disturbed and partly self-regulating—not chaotic, but not entirely predictable).

The difficulty is compounded because the systems at the core of challenges-that-really-matter may not appear as “wholes.” Unlike, say, an engine or a dog or even a tornado, they may be hard to see all at once. They are often dispersed in space, and their “system-ness” is experienced only over time, often rendering them almost invisible. In some cases, we may live *within* these systems, seeing only a few individual parts, so the whole is easy to overlook.

We might call these “hidden” systems (or translucent systems), for example, natural systems (the water cycle, weather, and ecologies); social systems (languages, laws, and organizations); information systems (operating systems, DNS, and cloud-based services); and hybrids (local health-care systems and education systems).

Understanding these systems is a challenge. Water travels continuously through a cycle. Carbon also travels through a cycle. These cycles interact with each other and with other systems. Sometimes, large quantities (stocks) can be tied up (sequestered) so that they are not traveling through the cycles. Large changes in stock levels (sequestering or releasing water or carbon) affect climate as ice or carbon dioxide interacts with the planet’s oceans and weather.
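The stock-and-flow language can be made concrete with a small simulation. The following Python sketch is a toy, not a climate or hydrology model: it assumes a single material split between two hypothetical stocks, one circulating and one sequestered, with each flow a fixed fraction of the stock it drains. The names `Cycle`, `step`, and `run`, and all of the rates, are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Cycle:
    """Two hypothetical stocks of one material (water or carbon, say)."""
    circulating: float  # stock moving through the cycle
    sequestered: float  # stock tied up and out of circulation


def step(c: Cycle, sequester_rate: float, release_rate: float) -> Cycle:
    """Advance one time step; each flow is a fixed fraction of the stock it drains."""
    into_storage = sequester_rate * c.circulating   # flow from circulation into storage
    out_of_storage = release_rate * c.sequestered   # flow from storage back into circulation
    return Cycle(
        circulating=c.circulating - into_storage + out_of_storage,
        sequestered=c.sequestered + into_storage - out_of_storage,
    )


def run(c: Cycle, sequester_rate: float, release_rate: float, steps: int) -> Cycle:
    """Apply `step` repeatedly and return the final state of both stocks."""
    for _ in range(steps):
        c = step(c, sequester_rate, release_rate)
    return c


if __name__ == "__main__":
    start = Cycle(circulating=100.0, sequestered=900.0)
    slow = run(start, sequester_rate=0.02, release_rate=0.002, steps=2000)
    fast = run(start, sequester_rate=0.02, release_rate=0.004, steps=2000)
    print(f"slow release: {slow.circulating:.1f} circulating")  # slow release: 90.9 circulating
    print(f"fast release: {fast.circulating:.1f} circulating")  # fast release: 166.7 circulating
```

In this toy model, the circulating stock settles where the two flows balance, at release_rate × total / (sequester_rate + release_rate). Doubling only the release rate nearly doubles the material in circulation, even though the total never changes; only its distribution between the stocks shifts.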

In sum, we face the difficulties of untangling messes (taming wicked problems) and fostering innovation (economic and social). Both require understanding systems that are complex, dynamic, and probabilistic, as well as “hidden” or “translucent.”

What is more, systems are “observed.” As Humberto Maturana noted in his Theorem Number 1, “Anything said is said by an observer.”[9] Or, as Stafford Beer put it, “a system is not something given in nature”; it is something we define, even as we interact with it.[10]

Heinz von Foerster built on Maturana’s theorem with his Corollary Number 1, “Anything said is said to an observer.” What the observer “says” is a description, said to another observer in a language (they “share”), creating a connection that forms the basis for a society.[11]

Now, we can ask a seemingly simple question: How should we describe systems?

Or, more precisely, how should we describe systems that are complex, dynamic, probabilistic, “hidden,” and “observed”? In other words, what is systems literacy?

**What is systems literacy?**

Churchman outlined four approaches to systems: 1) The approach of the *efficiency expert* (reducing time and cost); 2) The approach of the *scientist* (building models, often with mathematics); 3) The approach of the *humanist* (looking to our values); and 4) The approach of the *anti-planner* (accepting systems and living within them, without trying to control them).[12] We might also consider a fifth approach: 5) The approach of the *designer* (prototyping and iterating systems or representations of systems), which in many respects is also the approach of the policy planner and the business manager.

Basic systems literacy (at least for designers, planners, and managers) includes three types of knowledge: 1) a systems *vocabulary* (the “content” of systems literacy, that is, command of a set of distinctions and entailments or relationships related to systems); 2) systems *reading* skills (skills of analysis, for recognizing common patterns in specific situations, e.g., identifying—finding and naming—a feedback loop); and 3) systems *writing* skills (skills of synthesis, for understanding and describing existing systems and for imagining and describing new systems).

Basic systems literacy should also be enriched with study of 1) the *literature* of systems (a canon of key works of theory and criticism); 2) a *history* of systems thinking (context, sources, and development of key ideas); and 3) *connections* (influences of systems thinking on other disciplines and vice versa, e.g., design methods and management science).

A good working vocabulary in systems includes around 150 terms. It begins with learning:

system, environment, boundary

process, transform function

stocks, flows, delay (lag)

source, sink

information (signal, message)

open-loop, closed-loop

goal (threshold, set-point)

feedback, feed-forward

positive feedback, negative feedback

reinforcing, dampening

vicious cycle, virtuous cycle

circular processes, circularity, resource cycle

explosion, collapse, oscillation (hunting)

stability, invariant organization

balancing, dynamic equilibrium, homeostasis

tragedy of the commons

As students progress, they learn:

behavior (action, task), measurement

range, resolution, frequency

sensor, comparator, actuator (effector)

servo-mechanism, governor

current state, desired state

error, detection, correction

disturbances, responses

controlled variable, command signal

control, communication

teleology, purpose

goal-directed, self-regulating

co-ordination, regulation

static, dynamic

first order, second order

essential variables

variety, “requisite variety”

transformation (table)

More advanced students learn:

dissipative system

emergence

autopoiesis

constructivism

recursion

observer, observed

controller, controlled

agreement, (mis-)understanding

“an agreement over an understanding”

learning, conversation

bio-cost, bio-gain

back-talk

structure, organization

co-evolution, drift

black box

explanatory principle

“organizational closure”

self-reference, reflexive

ethical imperative

structural coupling

“consensual co-ordination of consensual co-ordination”

“conservation of a manner of living”

The vocabulary of systems is closely tied to a set of structural and functional configurations—common patterns that recur in specific systems across a wide range of domains. Looking at a specific system, recognizing the underlying pattern, and describing the general pattern in terms of the specific system constitutes command of the vocabulary of systems, reading systems, and writing systems—that is, systems literacy. A person with basic systems literacy should be fluent with these patterns: resource flows and cycles; transform functions (processes); feedback loops (both positive and negative); feed-forward; requisite variety (meeting disturbances within a specified range); second-order feedback (learning systems); and goal-action trees (or webs).
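Requisite variety can be illustrated with a toy model as well. The sketch below assumes, purely for illustration, integer disturbances, a regulator with a fixed repertoire of responses, and an outcome equal to disturbance minus response; the regulator succeeds when the outcome stays within a tolerance of zero. The function names are hypothetical, and this is a simplification in the spirit of Ashby’s law of requisite variety, not Ashby’s own table-based formulation.

```python
import math
from typing import List, Optional, Sequence


def regulate(disturbance: int, responses: Sequence[int], tolerance: int) -> Optional[int]:
    """Choose the response that brings the outcome (disturbance - response) closest to zero.

    Returns None when even the best available response leaves the outcome
    outside the accepted range [-tolerance, +tolerance].
    """
    best = min(responses, key=lambda r: abs(disturbance - r))
    return best if abs(disturbance - best) <= tolerance else None


def unhandled(disturbances: Sequence[int], responses: Sequence[int], tolerance: int) -> List[int]:
    """List the disturbances this regulator cannot keep within the tolerance."""
    return [d for d in disturbances if regulate(d, responses, tolerance) is None]


def min_responses(n_disturbances: int, tolerance: int) -> int:
    """Lower bound in this toy model: one response absorbs at most 2 * tolerance + 1 disturbances."""
    return math.ceil(n_disturbances / (2 * tolerance + 1))


if __name__ == "__main__":
    disturbances = range(-10, 11)  # 21 possible disturbances
    print(min_responses(len(disturbances), tolerance=1))         # 7
    print(unhandled(disturbances, [-9, -6, -3, 0, 3, 6, 9], 1))  # []
    print(unhandled(disturbances, [-8, -4, 0, 4, 8], 1))         # [-10, -6, -2, 2, 6, 10]
```

With a tolerance of one, five responses cannot cover twenty-one disturbances however they are spaced, while seven well-placed responses can. In this model, only variety in the regulator can absorb variety in the disturbances.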

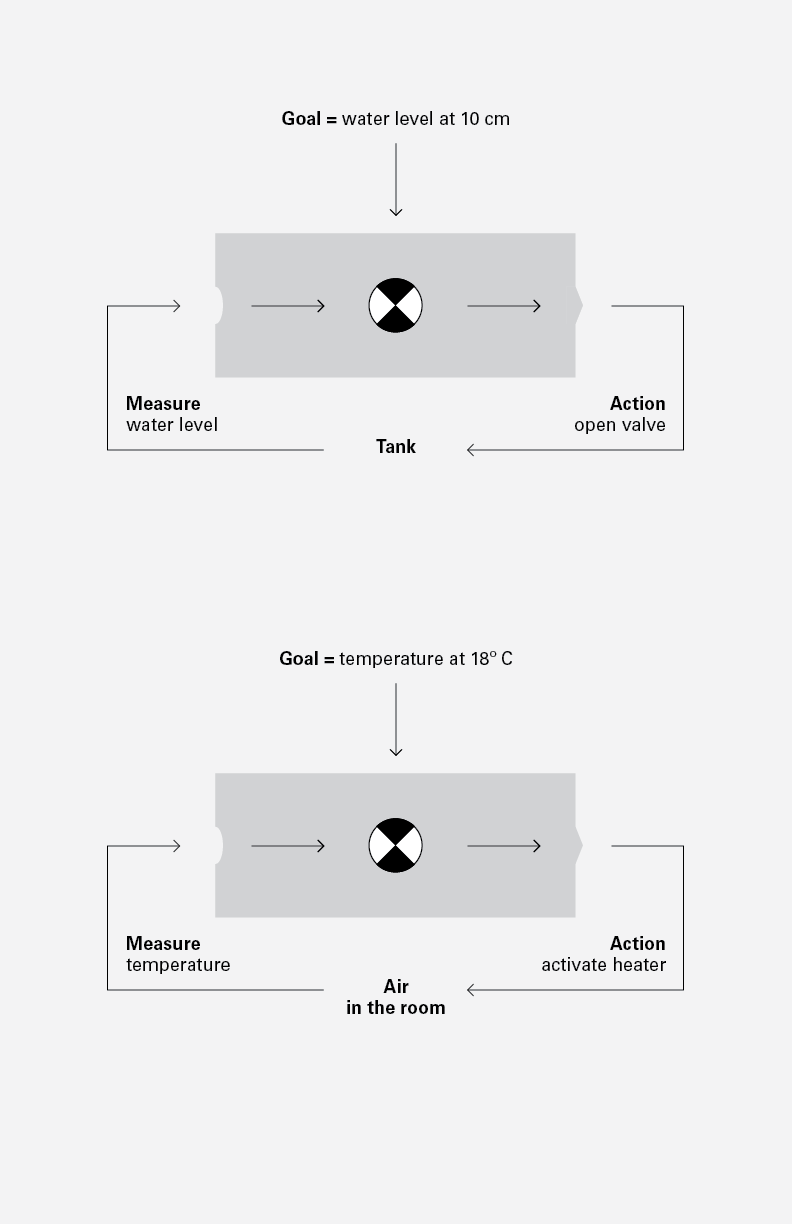

An example may help. Consider a toilet and a thermostat, quite different in form, mechanism, and domain. The first deals with water and waste, while the second deals with energy and heat. Yet the toilet and thermostat are virtually the same in function. Both are governors. The first governs the water level in a cistern, while the second governs the temperature in a room. Each system measures a significant variable and compares it to a set-point; if the measurement is below the set-point, the system activates a mechanism to raise the water level or the temperature until the set-point is reached. The underlying general pattern is a negative feedback loop. That’s what makes a governor a governor. Recognizing the negative feedback loop pattern is a mark of systems literacy.

This diagram describes the general form of a negative feedback loop. It applies to toilets, thermostats, and other governors.
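The governor pattern can also be sketched in code. In this minimal Python sketch, one generic loop does the comparing and switching, and only the “plant” differs between the two cases. The `room` and `cistern` functions, their rates, and the assumption of simple on/off control are hypothetical, chosen only to make the shared negative feedback loop visible.

```python
from typing import Callable, List


def govern(level: float, set_point: float,
           plant: Callable[[float, bool], float], steps: int) -> List[float]:
    """Generic negative feedback loop: measure, compare to the set-point, act, repeat."""
    history = []
    for _ in range(steps):
        error = set_point - level      # comparator: desired state minus measured current state
        actuate = error > 0            # actuator runs only while the measurement is below the set-point
        level = plant(level, actuate)  # the system responds to the action and to any disturbance
        history.append(level)
    return history


def room(temp: float, heater_on: bool) -> float:
    """Hypothetical room: the heater adds 2.0 degrees per step, and 5% of the
    difference from a 0-degree outdoors leaks away each step (the disturbance)."""
    return temp + (2.0 if heater_on else 0.0) - 0.05 * temp


def cistern(level: float, valve_open: bool) -> float:
    """Hypothetical cistern with no leak: the fill valve adds 1.5 liters per step while open."""
    return level + (1.5 if valve_open else 0.0)


if __name__ == "__main__":
    print(govern(0.0, 6.0, cistern, steps=6))  # [1.5, 3.0, 4.5, 6.0, 6.0, 6.0]
    temps = govern(10.0, 20.0, room, steps=60)
    print(min(temps[20:]), max(temps[20:]))    # both between 19 and 21 in this toy model
```

Swapping the plant is the only change between the two runs; the loop itself stays the same. The cistern rests exactly at its set-point, while the leaky room settles into a small oscillation around 20 degrees, a mild case of the “hunting” named in the vocabulary above.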

Text can describe a system’s function, linking it to a common pattern. But text descriptions require mental gymnastics from readers—*imagining* both the behavior of the system and the abstract functional pattern—*and* then linking the two. Images of physical systems aid readers, though behavior can be difficult to depict.

Functions are often represented in diagrams with some degree of formalism. Learning to read and write at least one formalism for describing system function is an important part of systems literacy. Donella Meadows uses one formalism; O’Connor and McDermott use another; Otto Mayr uses block diagrams. Yet in many cases, simple concept maps may be all the formalism required.

The value of rendering—of making visible—the often invisible functioning of systems can be quite high for teams who are developing and managing new products and services. Mapping systems can uncover differences in mental models, create shared understanding, and point to opportunities for improvement and other insights. In short, systems literacy can help us manage messes.

**How do we achieve systems literacy?**

Teaching systems in design school is not a new idea. Hochschule für Gestaltung (HfG) Ulm offered courses in operations research and cybernetics in the early 1960s.

Today, all graduate design programs should have courses in systems literacy—as should undergraduate programs in emerging fields (such as information design, interaction design, and service design) and cross-disciplinary programs (such as programs in innovation, social entrepreneurship, and design studies). Even traditional design programs (such as product design, communication design, and architecture) would benefit from courses in systems literacy, especially as their students begin to grapple with an increasingly networked world.

A few design schools ask students to read Donella Meadows’ book *Thinking in Systems* (often with little discussion and no exercises). Still, reading Meadows is a good start. But Meadows represents only one lens, the systems dynamics lens of “resource stocks and their flows.” Meadows only briefly touches on regulation and feedback; she does not fully address systems as “information flows”; and she ignores second-order systems and related topics, such as learning and conversation.

One course, 3 hours per week for 15 weeks, is the bare minimum for a survey of systems thinking. Ideally, there would be three semester-long courses:

**1) Introduction to Systems**

(covering systems dynamics, regulation, and requisite variety—with readings including Capra’s new *A Systems View of Life*, Meadows’ *Thinking in Systems*, and Ashby’s *An Introduction to Cybernetics*);

**2) Second-Order Systems**

(covering observing systems, autopoiesis, learning, and ethics—with readings including Glanville’s “Second-order Cybernetics,” von Foerster’s “Ethics and Second-order Cybernetics,” and Maturana and Dávila’s “Systemic and Meta-systemic Laws”); and

**3) Systems for Conversation**

(covering co-evolution, co-ordination, and collaboration—with readings including Pangaro’s “What is conversation?,” Pask’s “The Limits of Togetherness,” Beer’s *Decision and Control*, and Maturana’s “Meta-design”).

Learning systems literacy is like learning a new language. Very few people can learn Spanish simply by reading a book about it. Even learning a new programming language like Ruby is aided by experimentation; that is the purpose of writing hello-world programs and similar introductory exercises. Practice and immersion are also very important in learning a new language. And so it is for systems literacy. Thus, systems literacy courses should be organized to combine reading papers and books (and discussing them) with making artifacts (and discussing them)—in a format that blends seminar and studio.

A class might begin by examining the front page of any newspaper to identify systems that are mentioned that day. Students might work in pairs or small teams to quickly map a system. Presentation and discussion of the maps creates opportunities to talk about mapping techniques, underlying structures, and common patterns.

Reviewing common patterns (via canonical diagrams) is an important part of any systems literacy course. Students should participate in in-class exercises to apply the patterns to specific systems suggested by the teacher. Then, as homework, students should again apply the patterns to systems they identify, creating their own system maps. In the next meeting, an in-class presentation and critique of the homework provides an opportunity for students to see many examples of specific systems that share a common pattern.

The material can be reinforced by a final project to design a new system or repair (or improve) a faulty one, using the vocabulary and common patterns learned earlier in the course.

**Implications of (and for) observing systems**

Many designers worry that defining a set of knowledge about design risks undermining what is special about designing—that being rigorous and specific will turn design into engineering or science. But teaching vocabulary and grammar does not deny poetry. Quite the contrary; a knowledge of vocabulary and grammar, if not a prerequisite, seems at least a more fertile ground for the emergence of poetry.

As Harold Nelson and Erik Stolterman point out, “Designers need to be able to observe, describe, and understand the context and environment of the design situation… a designer is obliged to use whatever approaches provide the best possible understanding of reality…”[13] Systems literacy seems an obvious prerequisite for those who will be designing and managing systems.

Still, some designers see systems thinking as “mere calculation.” That misses the roots systems thinking has in biology, sociology, and cognitive science. It also misses the deep concern for ethics explicitly evidenced by important systems thinkers. This concern is particularly marked in regard to personal responsibility.

“Pask… distinguishes two orders of analysis. The one in which the observer enters the system by stipulating the system’s purpose… [the other] by stipulating his own purpose. …[and because he can stipulate his own purpose] he is autonomous… [responsible for] his own actions…”[14]

Maturana echoes the same theme: “…if we know that the reality that we live arises through our emotioning …we shall be able to act according to our awareness of our liking or not liking the reality… That is, we shall become responsible for what we do.”

Maturana goes on to point out that we are responsible for our language, our technology, and the world in which we live. “We human beings can do whatever we imagine… But we do not have to do all that we imagine, we can choose, and it is there where our behavior as socially conscious human beings matters.”[15]

We have a responsibility to try to make things better. If we want decision makers “to have a basis to judge the effects of their decisions,” or if we acknowledge that almost all the challenges that really matter—and most of the opportunities for social and economic innovation—involve systems, and if we know that we have available to us tools to help us think about systems, then we must put those tools into circulation. We must build systems literacy. To not do so would be irresponsible.

**Endnotes**

1: Churchman, C. West, *The Systems Approach*, Delacorte Press, New York, 1968.

2: Dade, Cory, “In Their Own Words: GOP Candidates And Science,” NPR, September 7, 2011 [http://www.npr.org/2011/09/07/140071973/in-their-own-words-gop-candidates-and-science](http://www.npr.org/2011/09/07/140071973/in-their-own-words-gop-candidates-and-science)

3: As quoted by The New York Times, October 23, 2008, [http://www.nytimes.com/2008/10/24/business/economy/24panel.html](http://www.nytimes.com/2008/10/24/business/economy/24panel.html)

4: Maxwell, James Clerk, “On Governors,” Proceedings of the Royal Society, No. 100, 1868.

5: Reagan, Ronald, “Inaugural Address,” Washington, D.C., January 20, 1981. [http://www.reaganfoundation.org/pdf/Inaugural_Address_012081.pdf](http://www.reaganfoundation.org/pdf/Inaugural_Address_012081.pdf)

6: “Constitution of the United States,” Philadelphia, 1787. [http://www.archives.gov/exhibits/charters/constitution_transcript.html](http://www.archives.gov/exhibits/charters/constitution_transcript.html)

7: Ackoff, Russell, “The Future of Operational Research,” *The Journal of the Operational Research Society*, Vol. 30, No. 2, pp. 93-104, February, 1979.

8: Rittel, Horst, and Webber, Melvin, “Dilemmas in a General Theory of Planning,” *Policy Sciences*, Vol. 4, pp. 155–169, 1973.

9: Maturana, Humberto, and Dávila, Ximena, “Systemic and Meta-systemic Laws,” ACM *Interactions*, Volume XX.3, May + June 2013. (Law 1 originally published in 1970.)

10: Beer, Stafford, *Decision and Control: The Meaning of Operational Research and Management Cybernetics*, John Wiley & Sons, New York, 1966.

11: von Foerster, Heinz, “Cybernetics of Cybernetics,” 1979, in *Understanding Understanding*, Springer, New York, 2003.

12: Churchman, op. cit.

13: Nelson, Harold, and Stolterman, Erik, *The Design Way*, MIT Press, Cambridge, 2012.

14: Pask as quoted by von Foerster, op. cit.

15: Maturana, Humberto, “Meta-Design,” 1997. [http://www.inteco.cl/articulos/006/texto_ing.htm](http://www.inteco.cl/articulos/006/texto_ing.htm)

